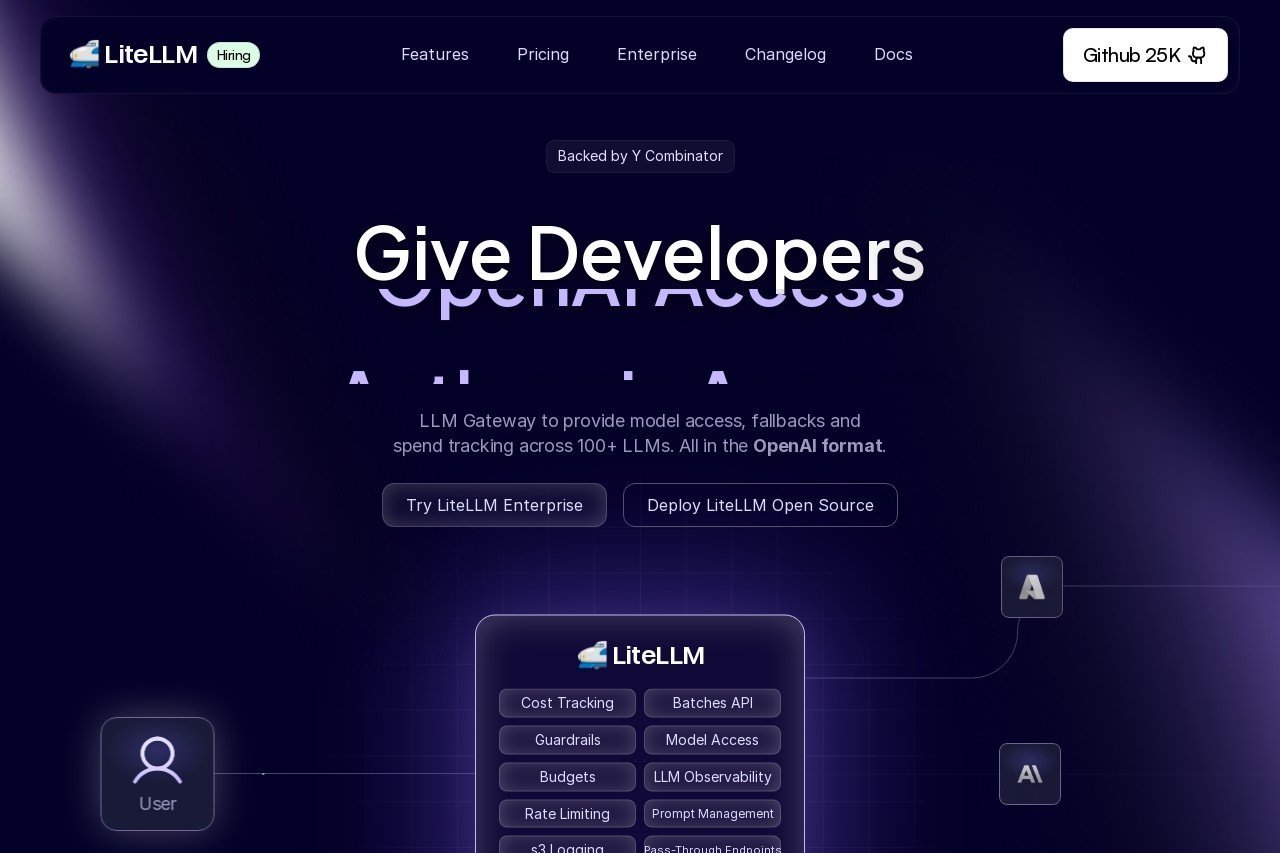

A unified LLM gateway providing authentication, load balancing, and spend tracking for 100+ large language models through OpenAI-compatible APIs.

LiteLLM

Introduction to LiteLLM

LiteLLM is a unified gateway that simplifies working with more than 100 large language models (LLMs). Because it exposes OpenAI-compatible APIs, developers and businesses can call models from many providers through one interface instead of writing and maintaining a separate integration for each model.
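Because the gateway accepts the OpenAI chat-completions format, switching providers is mostly a matter of changing the `model` string. The sketch below only builds (and does not send) such a request using Python's standard library. It assumes a gateway running locally at `http://localhost:4000`, a placeholder key `sk-example`, and illustrative `provider/model` names. All three are assumptions, so check the LiteLLM documentation for the address and model names your deployment actually uses.

```python
import json
import urllib.request

# Assumed gateway address and placeholder key; replace with your deployment's values.
GATEWAY_URL = "http://localhost:4000/v1/chat/completions"
API_KEY = "sk-example"


def build_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for any model behind the gateway."""
    body = {
        "model": model,  # the only field that changes between providers
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        GATEWAY_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {API_KEY}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# The same request shape targets two different providers (model names are illustrative).
req_a = build_chat_request("anthropic/claude-3-haiku-20240307", "Hello")
req_b = build_chat_request("cohere/command-r", "Hello")
# To actually send one: urllib.request.urlopen(req_a)
```

Keeping the request shape fixed is what lets application code stay the same while the gateway handles each provider's native API.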

Key Features

- Unified API: Call models from many providers (e.g., Anthropic, Cohere, Hugging Face) through a single OpenAI-style endpoint.

- Authentication: Secure your API endpoints with customizable key management and access controls.

- Load Balancing: Distribute requests across multiple model deployments or instances to improve availability and performance.

- Spend Tracking: Monitor and analyze usage costs in real time with detailed logging and budgeting tools.
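The authentication feature can be pictured as a lookup from each issued key to the models that key may call. This is a minimal sketch, assuming a `Bearer <key>` header and an in-memory key table; the names `KeyPolicy`, `KEYS`, `AuthError`, and `authorize` are hypothetical and are not part of LiteLLM's API or storage schema.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class KeyPolicy:
    """Hypothetical per-key policy: who owns the key and which models it may call."""
    owner: str
    allowed_models: set[str] = field(default_factory=set)


# Hypothetical in-memory key table; a real gateway would persist this in a database.
KEYS = {
    "sk-team-a": KeyPolicy(owner="team-a", allowed_models={"gpt-4o-mini", "claude-haiku"}),
    "sk-team-b": KeyPolicy(owner="team-b", allowed_models={"command-r"}),
}


class AuthError(Exception):
    """Raised when a key is unknown or lacks access to the requested model."""


def authorize(auth_header: str, model: str) -> KeyPolicy:
    """Validate a 'Bearer <key>' header and check that the key may call `model`."""
    scheme, _, key = auth_header.partition(" ")
    if scheme != "Bearer" or key not in KEYS:
        raise AuthError("invalid or missing API key")
    policy = KEYS[key]
    if model not in policy.allowed_models:
        raise AuthError(f"key for {policy.owner} may not call {model}")
    return policy


print(authorize("Bearer sk-team-a", "claude-haiku").owner)  # team-a
```

Scoping keys to specific models is one simple form of access control; the same policy record is a natural place to attach other per-key limits.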

Benefits

LiteLLM reduces development overhead by standardizing API calls across providers. It improves reliability by balancing load across deployments and makes costs transparent through spend tracking. The same setup supports both rapid prototyping and scaling up AI-driven projects.
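The reliability benefit comes from spreading traffic across several deployments of a model and retrying elsewhere when one fails. The sketch below illustrates that general pattern with a random ordering plus failover. The deployment names and the `call_deployment` callback are hypothetical, and LiteLLM's own router provides its own configurable routing strategies that this sketch does not reproduce.

```python
import random
from typing import Callable, Optional, Sequence


class AllDeploymentsFailed(Exception):
    """Raised when every deployment of a model has been tried and failed."""


def route_with_failover(
    deployments: Sequence[str],
    call_deployment: Callable[[str], str],
    rng: Optional[random.Random] = None,
) -> str:
    """Try deployments in a random order and return the first successful response.

    Shuffling spreads load across deployments; moving on after an error
    provides failover when a single deployment is down.
    """
    rng = rng or random.Random()
    order = list(deployments)
    rng.shuffle(order)
    errors = []
    for name in order:
        try:
            return call_deployment(name)
        except Exception as exc:  # a real router would catch specific, retryable errors
            errors.append(f"{name}: {exc}")
    raise AllDeploymentsFailed("; ".join(errors))


def fake_call(name: str) -> str:
    # Hypothetical backend: the "eu" deployment is down, the others answer.
    if name == "gpt-4o-mini-eu":
        raise ConnectionError("timeout")
    return f"ok from {name}"


deployments = ["gpt-4o-mini-us", "gpt-4o-mini-eu", "gpt-4o-mini-asia"]
print(route_with_failover(deployments, fake_call, random.Random(0)))
```

Whichever order the shuffle produces, the call returns a response from one of the healthy deployments; it only raises if every deployment fails.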

Ideal Users

LiteLLM is well suited to developers, AI researchers, and enterprises building applications that integrate multiple LLMs. Startups can use it to scale cost-effectively, and large organizations can use it to manage AI services centrally.

Frequently Asked Questions

- Is LiteLLM open source? Yes, LiteLLM is open source and community-driven.

- Does it support custom models? Yes. Privately hosted models can be integrated through the same API.

- How does spend tracking work? It logs token usage and costs per model, providing insights via dashboards or exports.
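The per-model logging described in the answer above can be sketched as a small ledger that converts each request's token counts into a cost and sums it by model, with a simple budget check. The prices and model names are made-up placeholders, not real provider rates, and `UsageRecord` and `SpendLedger` are hypothetical illustrations rather than LiteLLM's logging schema.

```python
from collections import defaultdict
from dataclasses import dataclass

# Placeholder prices in USD per 1,000 tokens as (input, output); NOT real provider rates.
PRICES_PER_1K = {
    "model-a": (0.50, 1.50),
    "model-b": (0.25, 1.25),
}


@dataclass
class UsageRecord:
    model: str
    prompt_tokens: int
    completion_tokens: int

    def cost(self) -> float:
        """Cost of one request from its token counts and the placeholder price table."""
        in_price, out_price = PRICES_PER_1K[self.model]
        return (self.prompt_tokens * in_price + self.completion_tokens * out_price) / 1000


class SpendLedger:
    """Hypothetical in-memory ledger that accumulates spend per model."""

    def __init__(self) -> None:
        self.records = []
        self.spend_by_model = defaultdict(float)

    def log(self, record: UsageRecord) -> None:
        self.records.append(record)
        self.spend_by_model[record.model] += record.cost()

    def total(self) -> float:
        return sum(self.spend_by_model.values())

    def over_budget(self, limit_usd: float) -> bool:
        """True once total spend reaches a (hypothetical) budget limit."""
        return self.total() >= limit_usd


ledger = SpendLedger()
ledger.log(UsageRecord("model-a", prompt_tokens=1000, completion_tokens=200))  # 0.80 USD
ledger.log(UsageRecord("model-b", prompt_tokens=2000, completion_tokens=400))  # 1.00 USD
print(f"{ledger.total():.2f}")  # 1.80
```

A dashboard or export would then read from records like these, grouped by model, key, or team.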