LlamaIndex Agents Documentation 2025: Ultimate Guide to Building and Deploying AI Agents

LlamaIndex is a leading framework for building LLM-powered agents that work with enterprise data. In 2025 the focus on agentic systems has intensified, with advances in workflows, document processing, and multi-agent architectures. This guide, drawn from the official documentation and recent updates, covers the core aspects of LlamaIndex agents: setup, customization, and real-world applications. Whether you're a beginner developer or an experienced AI engineer, it offers actionable guidance for building reliable, production-ready agents.

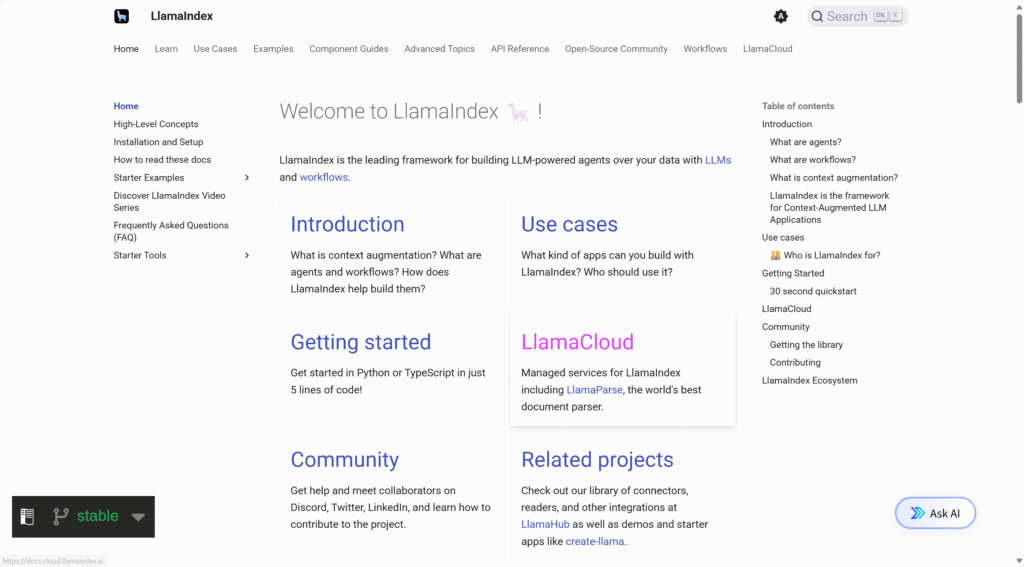

What Are LlamaIndex Agents?

LlamaIndex agents are automated reasoning engines powered by large language models (LLMs). They handle complex queries by breaking them down, selecting tools, and executing tasks. Unlike simple chatbots, they combine memory, tools, and a decision-making loop to perform actions such as data retrieval, analysis, and report generation. The official documentation defines an agent as a system that uses an LLM, memory, and tools to handle user inputs. In 2025, the emphasis is on "agentic document workflows" (ADW), which let agents handle unstructured data in enterprise settings, such as contract reviews or financial analysis.

Key components include:

- LLM Integration: Supports models like Gemini 2.5 Pro for reasoning and tool calling.

- Tools and Memory: Agents use function tools (e.g., for multiplication or web searches) and memory buffers for context retention.

- Workflows: Event-driven systems for orchestrating multi-step processes, now with durable persistence for long-running tasks.
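
To make the interplay of these components concrete, here is a minimal, framework-independent sketch. It does not use the LlamaIndex API: `MiniAgent`, `decide`, and `run` are hypothetical names, and the "LLM" is replaced by a keyword rule so the example runs offline. It only illustrates the loop of choosing a tool, calling it, and recording the exchange in a memory buffer.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


def multiply(a: float, b: float) -> float:
    """Multiply two numbers."""
    return a * b


def add(a: float, b: float) -> float:
    """Add two numbers."""
    return a + b


@dataclass
class MiniAgent:
    """Hypothetical toy agent: a tool registry, a memory buffer, a decision step."""

    tools: Dict[str, Callable[..., float]]
    memory: List[Tuple[str, str]] = field(default_factory=list)

    def decide(self, query: str) -> Tuple[str, List[float]]:
        # Stand-in for the LLM: pick a tool by keyword and pull out the numbers.
        numbers = [float(n) for n in re.findall(r"-?\d+(?:\.\d+)?", query)]
        wants_product = "times" in query or "multiply" in query
        return ("multiply" if wants_product else "add"), numbers[:2]

    def run(self, query: str) -> float:
        self.memory.append(("user", query))                 # remember the input
        name, args = self.decide(query)                     # decision step
        result = self.tools[name](*args)                    # tool call
        self.memory.append(("tool:" + name, str(result)))   # remember the outcome
        return result


if __name__ == "__main__":
    agent = MiniAgent(tools={"multiply": multiply, "add": add})
    print(agent.run("What is 6 times 7?"))
    print(agent.memory)
```

In a real agent the `decide` step would be an LLM call that returns a tool name and arguments, and the memory buffer would be fed back into the prompt on the next turn.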

Latest Updates and Versions in 2025

LlamaIndex has seen significant updates in 2025, focusing on productionizing agents. The framework's core package reached version 0.13 by mid-year, introducing features like Agentic Document Workflows and enhanced compatibility with models such as Gemini 2.5 Pro. Key releases include:

- vibe-llama v0.3.0: Adds docuflows for building document-processing workflows from natural language.

- Durable Workflows: Persistence strategies for multi-run agents, ideal for complex document tasks.

- AutoRFP and FlowMaker: Tools for automating proposals and visual agent building.
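
The idea behind durable, event-driven workflows can be sketched without any framework. The example below is an assumption-laden illustration, not the LlamaIndex Workflows API: `run_workflow`, `STEPS`, and the JSON checkpoint file are hypothetical. Each step emits the name of the next event, and the state is written to disk after every step so an interrupted run can resume where it stopped.

```python
import json
import os
import tempfile
from typing import Callable, Dict, Optional, Tuple

# A step takes the shared state and returns (next_event, new_state).
Step = Callable[[dict], Tuple[Optional[str], dict]]


def parse(state: dict) -> Tuple[Optional[str], dict]:
    return "extract", {**state, "words": state["text"].split()}


def extract(state: dict) -> Tuple[Optional[str], dict]:
    return "summarize", {**state, "count": len(state["words"])}


def summarize(state: dict) -> Tuple[Optional[str], dict]:
    return None, {**state, "summary": f"{state['count']} words"}


STEPS: Dict[str, Step] = {"parse": parse, "extract": extract, "summarize": summarize}


def run_workflow(path: str, initial: dict, max_steps: Optional[int] = None) -> dict:
    """Run steps until no event remains, checkpointing after every step."""
    if os.path.exists(path):
        # Resume from the last checkpoint; `initial` is ignored in this case.
        with open(path) as f:
            ckpt = json.load(f)
    else:
        ckpt = {"event": "parse", "state": initial}
    done = 0
    while ckpt["event"] is not None and (max_steps is None or done < max_steps):
        event, state = STEPS[ckpt["event"]](ckpt["state"])
        ckpt = {"event": event, "state": state}
        with open(path, "w") as f:
            json.dump(ckpt, f)  # persist progress before moving on
        done += 1
    return ckpt


if __name__ == "__main__":
    ckpt_path = os.path.join(tempfile.mkdtemp(), "ckpt.json")
    run_workflow(ckpt_path, {"text": "net 30 payment terms"}, max_steps=1)  # "crash" after one step
    print(run_workflow(ckpt_path, {})["state"]["summary"])  # resumes from the checkpoint
```

Writing the checkpoint after each step trades some I/O for the ability to restart long-running document tasks without repeating completed work.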

For migration, check the changelog in the GitHub repository, which prioritizes documentation updates. These enhancements are intended to improve compatibility with enterprise tools, reduce hallucinations, and improve ROI.

Tutorials and Practical Applications

Getting started with LlamaIndex agents is straightforward. A basic agent can be built in just a few lines of Python code, using tools like multiplication functions or web searches. For real-world use:

- Agentic RAG: Combine retrieval-augmented generation with agents for research over data.

- Report Generation: Use multi-agent workflows for multimodal reports from PDFs.

- Invoice Extraction: Automate data extraction from documents, with a claimed productivity boost of 40%.
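
As a rough illustration of the agentic RAG pattern, the sketch below splits a compound question into sub-questions and runs a retrieval step for each. Everything here is a simplifying assumption: the in-memory `DOCS` corpus, the word-overlap scoring, and the `research` function are hypothetical stand-ins for a real index, embedding search, and LLM-driven planning.

```python
from typing import Dict, List, Set

# Tiny in-memory corpus standing in for an indexed document store.
DOCS: Dict[str, str] = {
    "contract": "The contract renews annually and payment is due in 30 days.",
    "invoice": "Invoice 1001 totals 250 dollars for consulting services.",
    "report": "Quarterly revenue grew while operating costs stayed flat.",
}

STOPWORDS = {"the", "is", "a", "and", "in", "for", "what", "when", "does"}


def tokenize(text: str) -> Set[str]:
    """Lowercase, strip punctuation, and drop common filler words."""
    words = {w.strip(".,?!").lower() for w in text.split()}
    return words - STOPWORDS


def retrieve(query: str, top_k: int = 1) -> List[str]:
    """Rank documents by the number of words they share with the query."""
    q = tokenize(query)
    ranked = sorted(DOCS, key=lambda name: len(q & tokenize(DOCS[name])), reverse=True)
    return [name for name in ranked[:top_k] if q & tokenize(DOCS[name])]


def research(query: str) -> Dict[str, List[str]]:
    """Stand-in for the agent: decompose the query, then retrieve per sub-question."""
    sub_questions = [part.strip() for part in query.split(" and ")]
    return {sub: retrieve(sub) for sub in sub_questions}


if __name__ == "__main__":
    print(research("When is payment due and what does the invoice total?"))
```

The point of the agentic layer is the decomposition: a single retrieval over the full question would have to choose between the contract and the invoice, while per-sub-question retrieval can surface both.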

The basic steps are: install the core package with pip install llama-index-core, define your tools, and run queries asynchronously. Advanced examples include stock portfolio agents and customer support bots.
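
The asynchronous part of that flow can be sketched with the standard library alone. This is not LlamaIndex code: `multiply`, `search_web`, and `run_queries` are hypothetical, and the web search is a stub that performs no network access. It shows only how independent tool calls can be awaited concurrently.

```python
import asyncio
from typing import List


async def multiply(a: float, b: float) -> float:
    """Async tool: the sleep is a placeholder for real I/O such as an LLM call."""
    await asyncio.sleep(0)
    return a * b


async def search_web(query: str) -> str:
    """Async tool stub: returns canned text instead of calling a search API."""
    await asyncio.sleep(0)
    return f"stub results for: {query}"


async def run_queries() -> List[object]:
    # Start both tool calls concurrently; results come back in call order.
    return await asyncio.gather(multiply(6, 7), search_web("LlamaIndex agents"))


if __name__ == "__main__":
    print(asyncio.run(run_queries()))
```

Because agents spend most of their time waiting on model and API responses, running independent calls concurrently is usually where the async style pays off.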

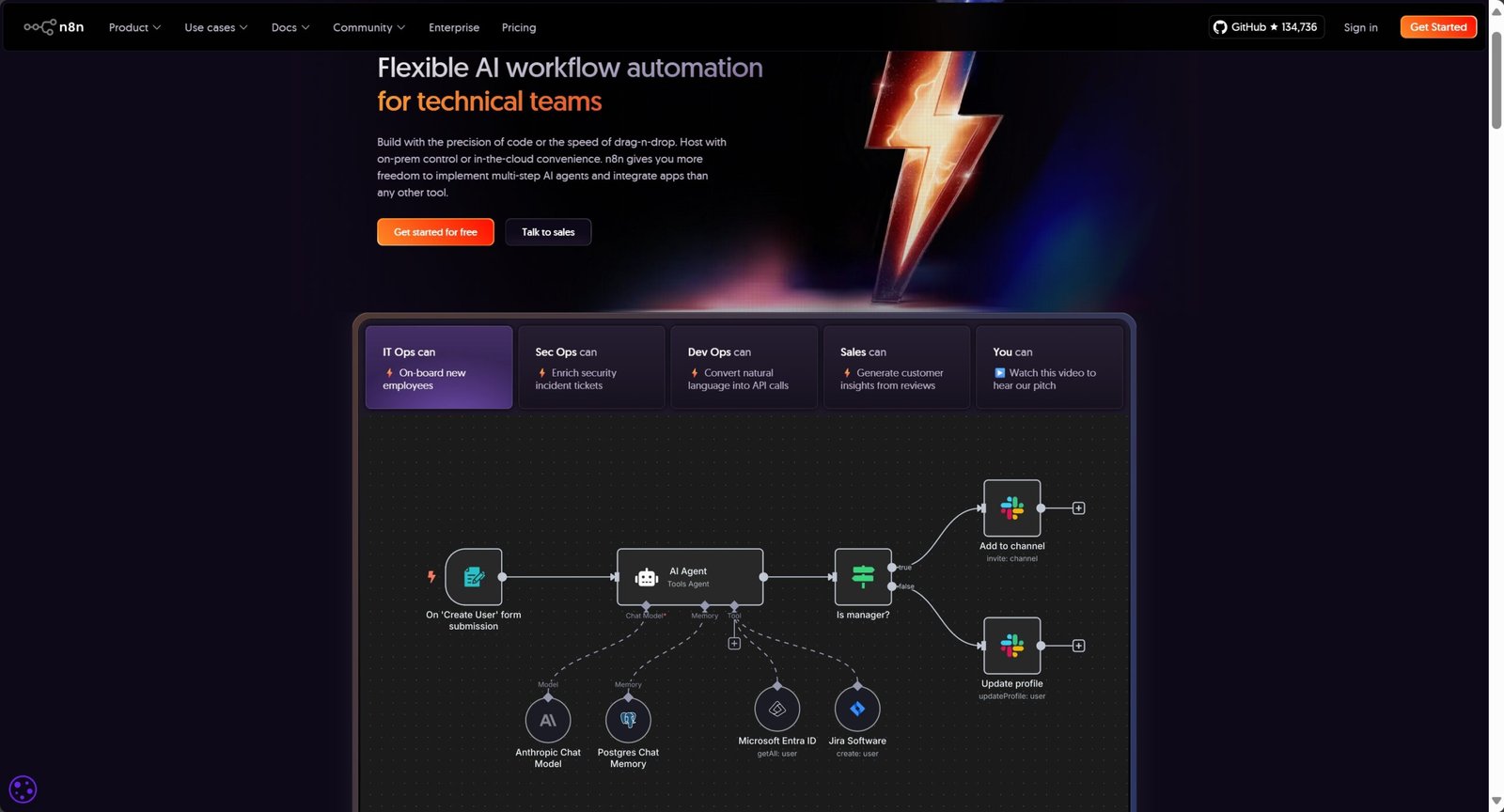

Integrations and Tools for Enhanced Functionality

LlamaIndex excels in integrations, supporting over 160 data sources and 40+ tools via LlamaHub. In 2025, highlights include:

- Web Data Agents: Integrate with Bright Data for real-time web scraping.

- Cloud Services: LlamaCloud with MCP servers for scalable deployments.

- Multimodal Support: ColPali for image-text RAG and Gemini Live for voice.

Prebuilt tools such as Google Search and S3 storage connect agents to external services, and custom tool specs can wrap your own APIs.
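
One way to picture a custom spec for an API is a class that groups related calls and exposes them as tool descriptions an agent could choose from. The sketch below is loosely modeled on that idea and is not the LlamaHub implementation: `WeatherApiSpec`, its methods, and this `to_tool_list` helper are hypothetical, and no real API is contacted.

```python
import inspect
from typing import Any, Dict, List


class WeatherApiSpec:
    """Hypothetical spec grouping related API calls (all responses are stubs)."""

    spec_functions = ["current_temperature", "forecast"]

    def current_temperature(self, city: str) -> str:
        """Return the current temperature for a city."""
        return f"stub temperature for {city}"

    def forecast(self, city: str, days: int = 3) -> str:
        """Return a forecast for a city over a number of days."""
        return f"stub {days}-day forecast for {city}"


def to_tool_list(spec: Any) -> List[Dict[str, Any]]:
    """Describe each listed method by name, docstring, and parameter types."""
    tools = []
    for name in spec.spec_functions:
        fn = getattr(spec, name)  # bound method, so `self` is not in the signature
        params = {
            p.name: p.annotation.__name__
            if p.annotation is not inspect.Parameter.empty
            else "Any"
            for p in inspect.signature(fn).parameters.values()
        }
        tools.append(
            {"name": name, "description": inspect.getdoc(fn), "parameters": params, "fn": fn}
        )
    return tools


if __name__ == "__main__":
    for tool in to_tool_list(WeatherApiSpec()):
        print(tool["name"], tool["parameters"])
```

Deriving the description from the function signature and docstring keeps the tool metadata in sync with the code, which matters because the LLM relies on that metadata to decide which tool to call.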

Community Resources and Enterprise Case Studies

The LlamaIndex community is thriving, with newsletters, events, and a Discord server for support. 2025 events include Vector Space Day in Berlin and AI webinars. Enterprises like KPMG and Salesforce use LlamaCloud for RAG pipelines, and LlamaIndex has secured $19M in funding for expansion. Case studies report 10-15 hours saved per report through automation.

To get involved, contribute on GitHub or browse LlamaHub for tools.

Conclusion

LlamaIndex agents in 2025 empower developers to create sophisticated AI systems that deliver real enterprise value. By leveraging updated documentation, tutorials, and integrations, you can build agents that are efficient, scalable, and trustworthy. Stay updated through official channels to harness the full potential of this framework.

This guide is based on extensive research from authoritative sources, ensuring accuracy and relevance for AI practitioners.