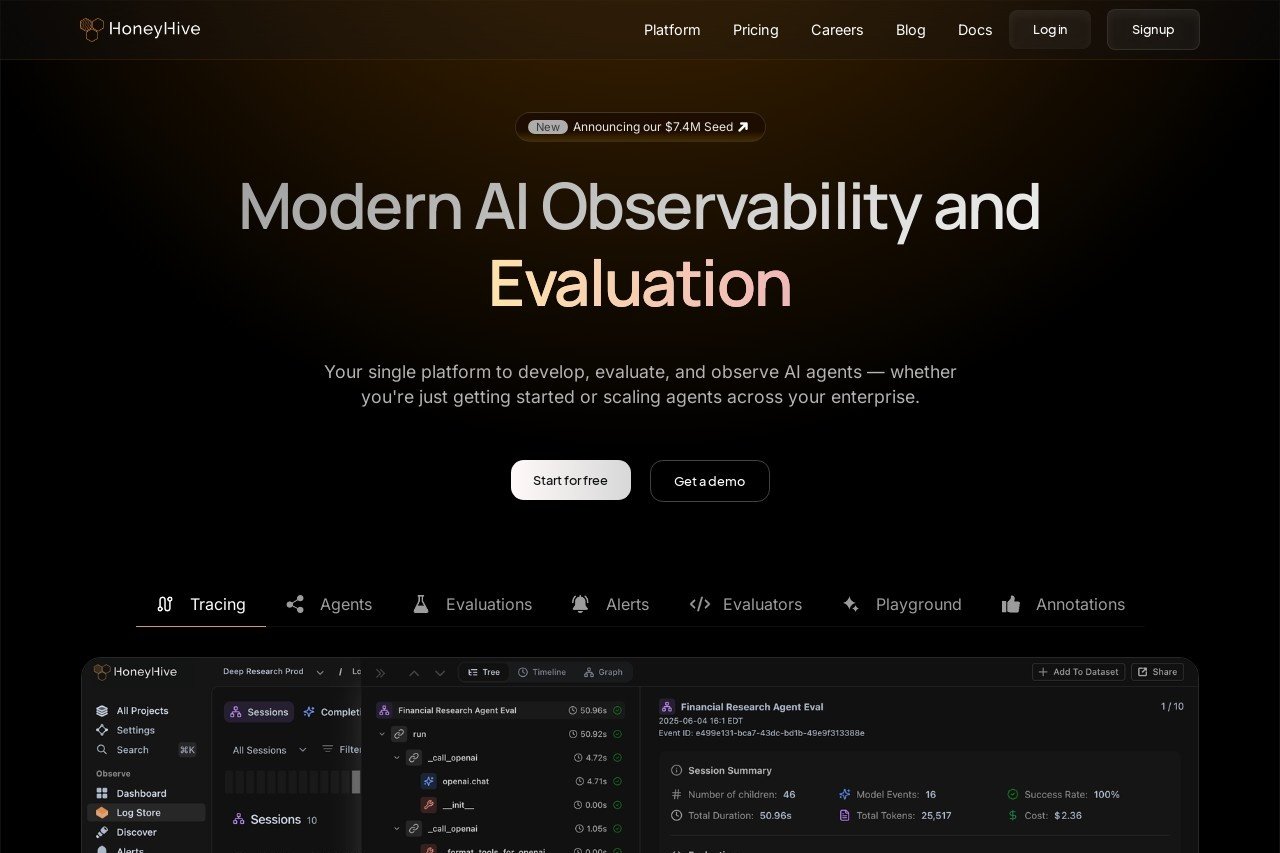

HoneyHive is a unified LLMOps platform offering AI evaluation, testing, observability, and collaborative prompt management for teams building LLM applications.

HoneyHive

Introduction

HoneyHive is a unified LLMOps platform for teams building applications with large language models (LLMs). Its tools cover the development lifecycle, from initial testing through production deployment and monitoring. By centralizing these workflows, HoneyHive aims to help teams collaborate more closely and ship reliable, high-performing AI applications faster.

Key Features

The platform integrates four core capabilities into a single environment:

- AI Evaluation: Systematically test and benchmark your LLM's performance against custom metrics and datasets.

- Testing: Run rigorous experiments to compare different models, prompts, and parameters to identify the best configuration.

- Observability: Gain deep, real-time insights into your LLM's usage, costs, latency, and outputs in production.

- Collaborative Prompt Management: Version, manage, and iterate on prompts as a team, ensuring consistency and best practices.
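The evaluation and testing features listed above boil down to a simple loop: run each candidate configuration over a dataset, score the outputs with a custom metric, and compare the averages. The following is a minimal, generic sketch of that loop in plain Python. It does not use the HoneyHive SDK; every name in it (`Example`, `exact_match`, `evaluate`, `config_a`, `config_b`) is hypothetical, and the two "configurations" are stand-in functions where a real run would call an LLM.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable


@dataclass(frozen=True)
class Example:
    """One dataset row: an input and the output we expect for it."""
    prompt_input: str
    expected: str


def exact_match(output: str, expected: str) -> float:
    """Custom metric: 1.0 if the normalized strings agree, else 0.0."""
    return 1.0 if output.strip().lower() == expected.strip().lower() else 0.0


def evaluate(
    generate: Callable[[str], str],
    dataset: list[Example],
    metric: Callable[[str, str], float],
) -> float:
    """Mean metric score of one configuration over the whole dataset."""
    return mean(metric(generate(ex.prompt_input), ex.expected) for ex in dataset)


# Hypothetical stand-ins for two prompt/model configurations.
def config_a(text: str) -> str:
    return text.upper()


def config_b(text: str) -> str:
    return text[::-1]


dataset = [Example("abc", "ABC"), Example("level", "level"), Example("xy", "XY")]

candidates = {"config_a": config_a, "config_b": config_b}
scores = {name: evaluate(fn, dataset, exact_match) for name, fn in candidates.items()}
best = max(scores, key=scores.get)  # configuration with the highest mean score
```

Keeping the metric and the generator as plain callables means a new metric or a new model can be swapped in without touching the evaluation loop.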

Unique Advantages

HoneyHive stands out by offering a unified solution that breaks down silos between different stages of LLM development.

- End-to-End Workflow: Seamlessly connect evaluation, testing, and monitoring without switching between disparate tools.

- Data-Driven Decisions: Make informed choices about model deployment based on comprehensive quantitative data and user feedback.

- Enhanced Collaboration: Provide a shared workspace for engineers, researchers, and product managers to work together effectively.

- Production Reliability: Proactively detect issues such as drift, regressions, or unexpected outputs to maintain application quality.
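To make the idea of regression detection concrete, here is a small illustrative sketch, not HoneyHive's actual method: it compares the mean quality score of a recent window against a baseline window and flags a regression when the drop exceeds a threshold. The function name `detect_regression`, the `max_drop` parameter, and the sample scores are all assumptions made for the example.

```python
from statistics import mean


def detect_regression(
    baseline: list[float], recent: list[float], max_drop: float = 0.05
) -> bool:
    """Return True if the recent mean score fell more than max_drop below baseline."""
    if not baseline or not recent:
        raise ValueError("both score windows must be non-empty")
    return mean(baseline) - mean(recent) > max_drop


# Illustrative scores only; real values would come from an evaluation metric.
baseline_scores = [0.90, 0.92, 0.88, 0.91]
stable_scores = [0.89, 0.90, 0.91]
degraded_scores = [0.70, 0.75, 0.72]

stable_flag = detect_regression(baseline_scores, stable_scores)
degraded_flag = detect_regression(baseline_scores, degraded_scores)
```

A fixed threshold on the mean is the simplest possible check; a production system would more likely account for variance and sample size before raising an alert.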

Ideal Users

HoneyHive is built for teams and organizations that are serious about deploying LLMs in production.

- AI and Machine Learning Engineers building and fine-tuning LLM-powered applications.

- Product Managers who need to oversee and measure the performance and impact of AI features.

- Researchers and Data Scientists conducting experiments to advance their models' capabilities.

- DevOps and MLOps professionals tasked with ensuring the scalability, reliability, and observability of AI systems.

Frequently Asked Questions

What is LLMOps?

LLMOps (Large Language Model Operations) encompasses the practices, tools, and processes used to manage the lifecycle of LLM-powered applications. It is analogous to MLOps but focuses on the challenges specific to language models.

How does HoneyHive improve our workflow?

It consolidates evaluation, testing, and monitoring into one platform, reducing complexity, improving team collaboration, and accelerating the iteration cycle from development to production.

Can we use it with any LLM?

Yes. HoneyHive is designed to be model-agnostic and works with a wide range of proprietary and open-source large language models.
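One way to picture model-agnostic tooling is a wrapper that only requires a "prompt in, text out" function, so the same logging applies to any provider. The sketch below is a generic illustration under that assumption and is not HoneyHive's API; `CallRecord`, `traced`, `echo_model`, and `shout_model` are hypothetical names, and the two models are local stand-ins for real LLM calls.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class CallRecord:
    """Minimal trace of one model call."""
    model_name: str
    latency_seconds: float
    output_chars: int


def traced(
    model_name: str, generate: Callable[[str], str], log: list[CallRecord]
) -> Callable[[str], str]:
    """Wrap any prompt-to-text function so each call appends a CallRecord to log."""
    def wrapper(prompt: str) -> str:
        start = time.perf_counter()
        output = generate(prompt)
        log.append(CallRecord(model_name, time.perf_counter() - start, len(output)))
        return output
    return wrapper


# Hypothetical stand-ins for two different model backends.
def echo_model(prompt: str) -> str:
    return f"echo: {prompt}"


def shout_model(prompt: str) -> str:
    return prompt.upper()


log: list[CallRecord] = []
models = {
    "echo": traced("echo", echo_model, log),
    "shout": traced("shout", shout_model, log),
}
outputs = {name: generate("hello") for name, generate in models.items()}
```

Because the wrapper depends only on the function signature, adding another backend means registering one more callable; the tracing code stays unchanged.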